Tuesday, December 27, 2011

HTML5 and the software engineer

HTML5 appears to have a number of benefits for consumers and car manufacturers. But what is often good for the goose is not necessarily good for the developer. Talking to the guys in the trenches is critical to understanding the true viability of HTML5.

Andy Gryc and Sheridan Ethier, manager of the automotive development team at QNX, pair up for a technical discussion on HTML5. They explore whether this new technology can support rich user interfaces, how HTML5 apps can be blended with apps written in OpenGL, and whether interprocess communication can be implemented between native and web-based applications.

So without further ado, here’s the latest in the educational series of HTML5 videos from QNX.

This interview of Sheridan Ethier is the third in a series from QNX on HTML5.

Labels:

Connected Car,

HMIs,

HTML5,

Infotainment,

Mobile connectivity,

Nancy Young,

Navigation,

QNX CAR,

QNX OS,

Smartphones,

Telematics

Monday, December 19, 2011

Is HTML5 a good gamble?

As the consumer and automotive worlds continue to collide, HTML5 looks like a good bet. And not a long shot either. In fact, the odds are all automakers will eventually use it. But since the standard won’t be mature for some time yet, should you take a chance on it now?

To answer this, Andy Gryc talks to Matthew Staikos of RIM. Matthew is the manager of the browser and web platform group at RIM, and has over 10 years of software development experience with a strong focus on WebKit for mobile and embedded systems. Matthew co-founded Torch Mobile, which RIM acquired for its browser technology.

Andy’s conversation with Matthew is the subject of the following video, the second in an educational series designed to get an industry-wide perspective on HTML5.

This interview of Matthew Staikos is the second in a series from QNX on HTML5.

Labels:

Cloud connectivity,

Connected Car,

HMIs,

HTML5,

Infotainment,

Mobile connectivity,

Nancy Young,

QNX CAR,

QNX OS,

Smartphones,

Telematics

Tuesday, December 13, 2011

What’s HTML5 got to do with automotive?

There’s been a lot of noise lately about HTML5. A September 2011 report by binvisions shows that search engines and social media web sites are leading the way toward adoption: Google, Facebook, YouTube, Wikipedia, Twitter, and plenty more have already transitioned to HTML5. Some are taking it even further: Facebook has an HTML5 Resource Center for developers, and the Financial Times has a mobile HTML5 version of its website.

It won’t be long before HTML5 is ubiquitous. We think automakers should (and will) use it.

To elucidate the technology and its relevance, we’ve created a series of educational videos on the topic. Here is the first in that series. Interviews with partners, customers, and industry gurus will soon follow.

This simple overview is the first in a series from QNX on HTML5. (Personally I like the ending the best.)

Labels:

Cloud connectivity,

Connected Car,

HMIs,

HTML5,

Infotainment,

Mobile connectivity,

Nancy Young,

Navigation,

QNX CAR,

QNX OS,

Smartphones,

Telematics

Thursday, December 8, 2011

Seamless connectivity is for more than online junkies

Though I’m not always enamored with sitting behind a computer all day, I find being off the grid annoying. Remember this email joke?


- You know you’re an online junkie when you:

- wake up at 3:00 am to go to the bathroom and stop to check your email on the way back to bed

- rarely communicate with your mother because she doesn’t have email

- check your inbox. It says ‘no new messages,’ so you check it again

Even though this joke circulated several years ago, it still strikes a chord. The big difference now is that there’s no longer a subculture of ‘online junkies.’ From the time we wake up in the morning to the time we go to bed, we all want to be connected — and that includes when we get behind the wheel. So to this joke I would add:

- resent driving because it means going off the grid

At QNX, we’re working toward a seamless experience where people can enjoy the same connectivity whether they’re texting their spouse from the mall or checking traffic reports while driving down the highway. See what I mean:

For more information about the technology described in this video, visit the QNX website.

Labels:

Cloud connectivity,

Connected Car,

Driver distraction,

HTML5,

Infotainment,

Mobile connectivity,

Nancy Young,

Spatial auditory displays

Tuesday, December 6, 2011

Video: The secret to making hands-free noise-free

Explaining a highly technical product to a broad audience is tough. To succeed, you must reach out to people on their own terms, without being condescending. Most people love a good explanation, but everyone hates being talked down to.

Case in point: The QNX Acoustic Processing Suite. This software runs in millions of cars and offers a benefit that everyone can relate to: clear, rich, easy-to-understand hands-free calls. But once you start explaining how the suite does this, it's easy to get mired in technical jargon and to forget the bigger picture — something that even a technical audience wants to see.

So we dropped the jargon and opted for a creative approach. It involves a marching band, a rock guitarist, and, for good measure, an electric fan with a really long extension cord. Seriously.

Intrigued yet? Well, then, grab some popcorn and dim the lights:

Interested in learning more about this technology? Check out the acoustic processing page on the QNX website.

BTW, companies that use the QNX Acoustic Processing Suite in their products include OnStar, whose FMV aftermarket mirror recently won a CES Innovations Design and Engineering Award.

Posted by Paul Leroux

Brand exposure


QNX has a knack for turning up where I least expect it. Sometimes, I'm even surprised by how our technology partners use it — and I head up strategic alliances at QNX Software Systems. In this case, at least, I can claim ignorance based on distance. Last week, Tokyo Weekender published a story on Freescale Japan from the perspective of David Uze, the company’s president. Anyone who has had the pleasure of meeting David knows he’s super passionate about his mission. And arguably, it must be a challenging one, for the market in Japan has a long history of being dominated by national silicon suppliers. But, from an automotive perspective, recent consolidations of some of those suppliers, along with the trend towards standardized architectures, have opened the door for companies like Freescale.

The article talks about Freescale’s planned expansion in Japan, recovery from the earthquake, the advantages of being a global company, and the Freescale Cup, a robotics competition for university students that will be launched in Japan next year.

This, for me, is David’s most interesting comment:

- “The reason I believe we must focus on Japan is because it is the most macro-economically focused culture in the world. Japan is the only country I know of where companies routinely create 50-year plans to ensure they are a strong economic force in the long term.”

I find this incredible, especially if it applies to high-tech companies. Most would struggle with a concise 5-year plan, let alone 50!

But back to QNX. If you look at the article's opening photo (see below), you'll see a car emblazoned with the logos of several Freescale suppliers, including a QNX logo that appears right below the Freescale wordmark. The QNX logo also appears in another photo, on a banner hanging above the head of Freescale CEO Rich Beyer as he addresses the crowd at an FTF event.

Talk about international brand exposure! Thanks, David!

Wednesday, November 30, 2011

Pimp your ride with augmented reality — Part II


Last week, I introduced you to some cool examples of augmented reality, or AR, and stated that AR can help drivers deal with the burgeoning amount of information in the car. Now that we’ve covered the basics, let’s look at some use-cases for both drivers and passengers. Remember, though, that these examples are just a taste — the possibilities for integrating AR into the car are virtually endless.

AR for the driver

When it comes to drivers, AR will focus on providing information while reducing distraction. Already, some vehicles use AR to overlay the vehicle trajectory onto a backup camera display, allowing the driver to gauge where the car is headed. Some luxury cars go one step further and overlay lane markings or hazards in the vehicle display.

Expect even more functionality in the future. In the case of a backup camera, the display might take advantage of 3D technology, allowing you to see, for example, that a skateboard is closer than the post you are backing towards. And then there is GM's prototype heads-up system, which, in dark or foggy conditions, can project lane edges onto the windshield or highlight people crossing the road up ahead:

AR can be extremely powerful while keeping distraction to a minimum. Take destination search, for example. You could issue the verbal command, “Take me to a Starbucks on my route. I want to see their cool AR cups.” The nav system could then overlay subtle route guidance on the road, along with a small Starbucks logo that grows larger as you approach your destination. When you arrive, the logo could hover over the building.

You'll no longer have to wonder if your destination is on the right or left, or if your nav system is correct when it says, “You have arrived at your destination.” The answer will be right in front of you.
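To make the "logo that grows as you approach" idea a little more concrete, here is a minimal Python sketch of how a display scale might be derived from the remaining distance. Everything in it is an assumption for illustration: the logo_scale helper, the linear ramp, the 2 km fade-in radius, and the scale limits are hypothetical and do not describe any shipping QNX or Starbucks feature.

```python
def logo_scale(distance_m: float,
               fade_start_m: float = 2000.0,
               min_scale: float = 0.2,
               max_scale: float = 1.0) -> float:
    """Return the on-screen scale for a destination logo (hypothetical helper).

    Beyond fade_start_m the logo stays at min_scale; inside that radius it
    grows linearly until it reaches max_scale at the destination itself.
    """
    # Clamp distance into [0, fade_start_m] so the scale never leaves its bounds.
    d = min(max(distance_m, 0.0), fade_start_m)
    progress = 1.0 - d / fade_start_m  # 0.0 far away, 1.0 on arrival
    return min_scale + (max_scale - min_scale) * progress


if __name__ == "__main__":
    # Print how the logo would scale at a few distances along the route.
    for d in (5000, 2000, 1000, 250, 0):
        print(f"{d:>5} m -> scale {logo_scale(d):.2f}")
```

Clamping at both ends keeps the icon from vanishing far away or ballooning over the road up close; a real system would likely tune the curve against driver-distraction guidelines rather than use a simple linear ramp.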

AR for the passenger

So what about the passenger? Well, you could easily apply AR to side windows and allow passengers to learn more about the world around them, à la Wikitude. Take, for example, this recent video from Toyota, which is one of the best examples of how AR could make long road trips less tedious and more enjoyable:

Labels:

Andrew Poliak,

Augmented reality,

Connected Car,

Driver distraction,

HMIs,

Speech interfaces

Tuesday, November 29, 2011

QNX-based nav system helps Ford SUVs stay on course down under

Posted by Paul Leroux

To reduce driver distraction, the system offers a simplified user interface and feature set. And, to provide accurate route guidance, the system uses data from an internal gyroscope and an external traffic message channel, as well as standard GPS signals. Taking the conditions of local roads into account, the software provides a variety of alerts and speed-camera warnings; it also offers route guidance in Australian English.

The navigation system is based on the iGO My way Engine, which runs in millions of navigation devices worldwide. To read NNG's press release, click here.

SWSA's new nav system for the Ford Territory is based on the Freescale i.MX31L processor, QNX Neutrino RTOS, and iGO My way Engine.

Labels:

ARM-based devices,

Ford,

HMIs,

Infotainment,

Navigation,

Paul Leroux,

QNX OS

Sunday, November 27, 2011

QNX-powered OnStar FMV drives home with CES Innovation award

Posted by Paul Leroux

To clinch this award, a product must impress an independent panel of industrial designers, engineers, and trade journalists. Speaking of impressions, it seems that OnStar FMV also made a hit with the folks at CNET, because they've chosen it as one of their Top Holiday Shopping Picks for 2011.

As you may have guessed, OnStar FMV uses QNX Neutrino as its OS platform. It also uses the QNX Acoustic Processing Suite, which filters out noise and echo to make hands-free conversations clear and easy to follow. The suite includes cool features like bandwidth extension, which extends the narrow-band hands-free signal frequency range to deliver speech that is warm and natural, as well as intelligible.

Have time for a video? If so, here's a fun look at FMV's features, including stolen vehicle recovery, automatic crash response, turn-by-turn navigation, hands-free calling, and one-touch emergency calling:

Labels:

Connected Car,

Hands-free systems,

OnStar,

Paul Leroux,

Telematics

Tuesday, November 22, 2011

Listen to the music

The audio CD is on its last track... Internet radio, anyone?

I don’t think anyone will disagree with me when I say that music still represents the most important element in an infotainment system. Just look at the sound system capabilities in new cars. Base systems today have at least 6 speakers, while systems from luxury brands like Audi and BMW boast up to 16 speakers and almost 1000 watts of amplification.

For nearly as long as I can remember, cars have come with CD players. For many years they’ve provided a simple way to take your music on the road. But nothing lasts forever.

Earlier this year, Ford announced it would discontinue CD players in many of its vehicle models. Some industry pundits have predicted that CD players will have no place in cars in model year 2015 and beyond. A few weeks ago, Side-Line Music Magazine reported that major labels plan to abandon the CD format as early as 2012. This revelation has created a flurry of activity on the Net, but the labels have yet to confirm it’s true.

Steady decline

The fact is, CD sales have declined steadily for the last several years, down 16 percent in 2010 alone. Digital downloads (the legal kind), on the other hand, have been growing quickly and are expected to exceed CD sales for the first time in 2012.

Where does that leave us in the car? Obviously, media device integration will be key in the coming years. QNX Software Systems has long supported Apple iPod integration and supported Microsoft’s ill-fated Zune for a while. USB connectivity is a given, and soon you’ll be able to stream music from your phone.

Radio redefined

But what’s more exciting is how radio is evolving in the vehicle. Along with the steering wheel, radio has been a staple of the car pretty much since day one. As the connected vehicle moves into the mainstream, internet radio will become a huge part of the automotive experience.

Companies like Slacker extend the concept of radio beyond audio to include artist bios, album art, photos, reviews, and more. Pandora, through its work with the Music Genome Project, expands the musical experience by playing songs it predicts you will appreciate. iHeartRadio aggregates American radio stations for listening throughout the US. TuneIn goes one step further with a global view: driving down Highway 101 in California, you’ll be able to tune in to all your favorites from around the world.

Beyond entertainment

These services are changing the way people consume music. Today, I rely on my car radio not only to entertain but also to educate by constantly exposing me to new artists and content. Internet radio in the car will expand my horizons even further. And as online music stores like 7Digital integrate their service with the internet radio stations, I’ll be able to download the song I just heard at the push of a button. Not good for CD sales, but it seems that’s the way of the future anyway.

We are, of course, working with the leaders in internet radio and online music services to bring them to a car near you.


Labels:

Connected Car,

Internet radio,

QNX CAR,

Romain Saha,

Toyota Entune

Monday, November 21, 2011

Pimp your ride with augmented reality — Part I

The use of electronics is exploding in automotive. Just last week, Intel proclaimed that the connected car “is the third-fastest growing technological device, following smartphones and tablets.”


Ten years ago, you’d be hard-pressed to find a 32-bit processor in your car. Now, some cars have four or more 32-bitters: one in the radio, another in the telematics module, yet another in the center display, and still another in the rear-seat system.

Heck, in newer cars, you’ll even find one in the digital instrument cluster — the QNX-powered cluster in the Range Rover, for example. Expect to see a similar demand for more compute power in engine control units, drive-by-wire systems, and heads-up displays.

The Range Rover cluster displays virtual speedometers and gauges, as well as warnings, suspension settings, and other info, all on a dynamically configurable display.

What do most of these systems have in common? The need to process tons of information, from both inside and outside of the vehicle, and to present key elements of that data in a safe, contextually relevant, and easy-to-digest fashion.

The next generation of these systems will be built on the following principles:

- Fully integrated cockpits — Vehicle manufacturers see system consolidation as a way to cut costs and reduce complexity, as well as to share information between vehicle systems. For instance, your heads-up display could discreetly let you know who is calling you, without forcing you to take your eyes off of the road. And it could do this even if the smarts integrating your phone and your car reside in another cockpit component — the telematics module, say.

- Augmented reality — With all of the data being generated from phones, cloud content services and, perhaps more importantly, the vehicle itself, presenting the right information at the right time in a safe way will become a major challenge. This is where augmented reality comes in.

Augmented reality is a cool use of cameras, GPS, and data to create smart applications that overlay a virtual world on top of the real world. Here are some of my favorite examples:

AR Starbucks cups — Use your phone to make your coffee cup come alive:

AR Starwars — Blast the rebel alliance squirrels!

AR postage stamp — Add a new dimension (literally) to an everyday object:

And here are a couple more for good measure:

AR ray gun — Blast aliens around the house!

Wikitude AR web browser — Explore the world around you while overlaying social networks, images, video, reviews, statistics, etc.

Stay tuned for my next post, where I will explore how AR could enhance the driving experience for both drivers and passengers — Andrew.

Labels:

Andrew Poliak,

Augmented reality,

Connected Car,

Digital instrument clusters,

Driver distraction,

Hands-free systems,

HMIs

Friday, November 18, 2011

I've always wondered about Android support...

My colleague Jeff Schaffer sent me this link, which gives an interesting analysis of Android support on various devices.

Clearly, it's pretty tough to stay on top of the Android release game. That's one very good reason for car makers to be wary, since they're bound to move even more slowly than handset makers.


Thursday, November 17, 2011

The need for green in automotive


The need for environmentally friendly practices and products has become so painfully obvious in recent years that it’s no longer possible to call it a debate or a controversy. Nowhere is this more conspicuous than in the automotive industry.

- “The personal automobile is the single greatest polluter as emissions from millions of vehicles on the road add up.” (US Environmental Protection Agency)

Working at QNX has given me insight into just how complex the problem is and how going green in automotive is not going to be a revolution. I've come to realize that it will require a good number of players on a large number of fronts.

An example of what happens when your car takes way too long to boot. :-)

To prevent such undignified delays, these systems typically do not power down completely. Instead, they suspend to RAM while the vehicle is off. This lets the system boot ‘instantly’ whenever the ignition turns over. But because there’s a small current draw to keep RAM alive, this trickle continually drains the battery. This might have minimal consequences today (other than cost to the manufacturer, which is a whole other story) but in the brave new world of electric and hybrid cars, battery capacity equals mileage. Typical systems thus shorten the range of green vehicles and, in the case of hybrids, force drivers to use not-so-green systems more often. More importantly perhaps, these systems give would-be buyers ‘range anxiety’. Indeed, according to the Green Market’s Richard Matthews, battery life is one of the top reasons the current adoption rate is so low.

A little-known feature of QNX technology helps solve this problem.

Architects using the QNX OS can organize the boot process to bring up complex systems in a matter of seconds. Ours is not an all-or-nothing proposition, as it is with monolithic operating systems (Windows and Linux are prime examples) that must load an entire system before anything can run. QNX supports a gradual phasing in of system functionality: it gets critical systems up and running first, while it loads less-essential features in the background. A QNX-based system can therefore start from a cold boot every time, which means no battery drain while the car is off.
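The general idea of phasing in functionality by priority can be illustrated with a small Python sketch. It is purely illustrative: the Service type, the tier numbering, the phased_boot helper, and the example service names are all hypothetical, and the code does not model how QNX Neutrino actually sequences its boot. It only shows critical services coming up first while less-essential ones load in the background.

```python
import threading
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Service:
    name: str
    tier: int                 # hypothetical: 0 = critical, higher = less essential
    start: Callable[[], None]


def phased_boot(services: List[Service]) -> threading.Thread:
    """Start tier-0 services synchronously, then the rest on a background thread.

    Returns the background thread so the caller can join() it if needed.
    """
    ordered = sorted(services, key=lambda s: s.tier)  # stable sort keeps input order within a tier
    critical = [s for s in ordered if s.tier == 0]
    deferred = [s for s in ordered if s.tier > 0]

    # Bring up critical functionality first; it does not wait for the full system.
    for svc in critical:
        svc.start()

    def load_rest() -> None:
        # Less-essential features load in tier order, in the background.
        for svc in deferred:
            svc.start()

    worker = threading.Thread(target=load_rest, name="deferred-boot")
    worker.start()
    return worker


if __name__ == "__main__":
    started: List[str] = []
    services = [
        Service("media player", 2, lambda: started.append("media player")),
        Service("rear-view camera", 0, lambda: started.append("rear-view camera")),
        Service("navigation", 1, lambda: started.append("navigation")),
    ]
    worker = phased_boot(services)
    print("available immediately:", started[0])
    worker.join()
    print("full start order:", started)
```

Because the critical tier runs synchronously, the rear-view camera is up before phased_boot returns; the deferred services then start in tier order on the background thread.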

And while this is no giant leap for mankind, it is certainly a solid step in the right direction. If the rest of us (consumers, that is) contributed similarly by trading in our clunkers for greener wheels, the industry could undoubtedly move forward in leaps and bounds. I suppose this means I’m going to have to take a long, hard look at my 2003 Honda Civic.

Labels:

Fast boot,

Green car,

Infotainment,

Nancy Young,

QNX OS

Monday, November 14, 2011

Can HTML5 keep car infotainment on track?

Posted by Paul Leroux

The rail industry realized long ago that, unless it settled on a standard, costly scenarios like this would repeat themselves ad infinitum. As a result, some 60% of railways worldwide, including those in China, now use standard gauge, ensuring greater interoperability and efficiency.


The in-car infotainment market should take note. It has yet to embrace a standard that would allow in-car systems to interoperate seamlessly with smartphones, tablets, and other mobile devices. Nor has it embraced a standard environment for creating in-car apps and user interfaces. Of course, there are existing solutions for addressing these issues. But that's the problem: multiple solutions, and no accepted standard. And without one, how will cars and mobile devices ever leverage one another out of the box, without a lot of workarounds? And how will automakers ever tap into a (really) large developer community?

No standard means more market fragmentation — and more fragmentation means less efficiency, less interoperability, and less progress overall. Who wants that?

Is HTML5, which is already transforming app development in the desktop, server, and mobile worlds, the standard the car infotainment industry needs? That is one of the questions my colleague, Andy Gryc, will address in his webinar, HTML5 for automotive infotainment: What, why, and how? The webinar takes place tomorrow, November 15. I invite you to check it out.

Labels:

Connected Car,

HMIs,

HTML5,

Mobile connectivity,

Paul Leroux

Thursday, November 10, 2011

Tech-nimble

After working more than 20 years in high tech, I've settled on a mantra: This too shall pass. (Hey, I didn’t say it was original!) To that end, patience is critical, as is flexibility. And ultimately, success depends less on predicting technology trends and more on responding to them. You've got to be tech-nimble, which requires not only the willingness to change, but the technology to accommodate — and profit from — that change.

Yesterday, Adobe announced a restructuring based on a change in direction, from mobile Flash to HTML5. Some might consider this development as proof that Adobe lost the battle to Steve Jobs. But to my mind, they've simply recognized a trend and responded decisively. Adobe has built a product portfolio based heavily on tooling, including tools for HTML5 development. So they definitely fall into the tech-nimble category.

QNX has an even greater responsibility to remain tech-nimble because so many OEMs use our technology as a platform for their products. Our technology decisions have an impact that ripples throughout companies building in-car infotainment units, patient monitoring systems, industrial terminals, and a host of other devices.

So, back to the Flash versus HTML5 debate. QNX is in a great position because our universal application platform approach enables us to support new technologies quickly, with minimal integration effort. This flexibility derives in part from our underlying architecture, which allows OS services to be cleanly separated from the applications that access them.

Today, our platform supports apps based on technologies such as Flash, HTML5, Qt, native C/C++, and OpenGL ES. More to the point, it allows our customers to seamlessly blend apps from multiple environments into a single, unified user experience.

Now that’s tech-nimble.


Wednesday, November 9, 2011

Adobe’s out of mobile? Read the fine print

The blogosphere is a-buzz with Adobe’s apparent decision to abandon Flash in mobile devices. I get the impression, though, that many people haven’t bothered to read Adobe’s announcement. If they did, they would come away with a very different conclusion.

Let me quote what Adobe actually said (emphasis mine):

- "Our future work with Flash on mobile devices will be focused on enabling Flash developers to package native apps with Adobe AIR for all the major app stores. We will no longer adapt Flash Player for mobile devices to new browser, OS version or device configurations. Some of our source code licensees may opt to continue working on and releasing their own implementations. We will continue to support the current Android and PlayBook configurations with critical bug fixes and security updates."

What’s being discontinued is the Flash plug-in for mobile browsers. Adobe will still support and work on Mobile AIR, and on the development of standalone mobile applications.

A number of cross-platform applications today are implemented in Adobe AIR, and that’s staying the same. Adobe is being smart — they’re picking and choosing their battles, and have decided to give this one to HTML5. We’re big believers in HTML5, and Adobe's announcement makes complete sense: Don’t bother with the burden of Flash plug-in support when you can do it all in the browser. You can still build killer apps using Adobe AIR.

A cool and innovative speedometer... for 1939

Paul Leroux

But how, exactly, do you improve situational awareness (SA)? The paper discusses various techniques, and I couldn't possibly do justice to all of them here. But one approach is to supplement the driver's eyes and ears with indicators and warnings, based on information from sensors, roadside systems, and other vehicles.

Here's an example: A system in your car learns, through cloud-based traffic reports, that the road ahead is slick with ice. It also determines that you are driving much too fast for such conditions. The system immediately kicks into action, perhaps by warning you of the icy conditions or by telling you to ease off the accelerator.

Too bad the engineers who designed the 1939 Plymouth P8 didn't have access to such technology. I'm sure they would have embraced it totally.

You see, they too wanted to warn drivers about excess speed. Unfortunately, the technology of the time limited them to creating a primitive, one-size-fits-all solution — the safety speedometer.

Color coded for safety

From what I've read, these speedometers switch from green to amber to red, depending on the car's speed. I've only seen still photos of these speedometers, but allow me to invoke the magic of Photoshop and reconstruct how I think they work.

The safety speedometer has a rotating bezel, and embedded in this bezel is a small glass bulb. At speeds from 0 to 30 mph, the bulb glows green.

At speeds from 30 to 50 mph, the bulb turns amber.

And at over 50 mph, the bulb turns red.

Given the limitations of 1939 technology, the Plymouth safety speedometer couldn't take driving conditions or the current speed limit into account. It glowed amber at 30 mph, regardless of whether you were cruising through your neighborhood or poking down the highway. As a result, it was more of a novelty than anything else. In fact, I wonder if people driving the car for the first time would have focused more on watching the colors change than on the road ahead. If so, the speedometer may have actually reduced situational awareness. Oops!

Compare this to a software-based digital speedometer, which could take input from multiple sources, both within and outside the car, to provide feedback that dynamically changes with driving conditions. For instance, a digital speedometer could acquire the current speed limit from a navigation database and, if the car is exceeding that limit, remind the driver that they risk a speeding ticket.
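The kind of context-aware feedback described above can be sketched in a few lines. This is a minimal, hypothetical Python example, not QNX code: it assumes the speed limit has already been read from a navigation database and that an icy-road flag has arrived from a cloud traffic report. The names `DrivingContext`, `speed_advisory`, and `ICY_ROAD_FACTOR` (and the 70% figure) are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional

# Assumption for illustration only: on ice, treat 70% of the posted limit as "safe".
ICY_ROAD_FACTOR = 0.7


@dataclass
class DrivingContext:
    speed_limit_mph: float   # hypothetical input from a navigation database
    road_icy: bool = False   # hypothetical input from a cloud traffic report


def speed_advisory(speed_mph: float, ctx: DrivingContext) -> Optional[str]:
    """Return a warning for the driver, or None if no warning is needed."""
    # Road conditions take priority: warn well below the posted limit on ice.
    if ctx.road_icy and speed_mph > ctx.speed_limit_mph * ICY_ROAD_FACTOR:
        return "Icy road ahead: ease off the accelerator."
    # Otherwise, compare against the posted limit for this stretch of road.
    if speed_mph > ctx.speed_limit_mph:
        return "Over the speed limit: you risk a speeding ticket."
    return None
```

Unlike the fixed color bands of 1939, the same 30 mph produces a warning in a 25 mph zone but not on a 50 mph road.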

That said, I have a soft spot for anyone who is (or was) ahead of their time. Some enterprising Plymouth engineers in the 1930s realized that faster speeds bring the need for even greater situational awareness. Their solution was primitive, but it offered a hint of what, more than 70 years later, can finally become reality.

Sunday, November 6, 2011

Some people drive me to distraction

Paul Leroux

When you pan a camera, you never really know what kind of image you're going to get. Often, the results are interesting. And sometimes, they're downright eye-opening. Take this shot, for example.

Now, holding a cellphone while rocketing down the highway is just plain wrong. To anyone who does it, I have one thing to say: "You're endangering other people's lives for the sake of a f***ing phone call. Where the hell do you get off doing that?"

But look at this guy. He isn't holding a phone, but a coffee, which is even worse. Just imagine if he gets into a situation that demands quick, evasive action. He will, in all likelihood, hold on to the cup for fear of burning himself. If he were holding a phone, by contrast, he would simply drop it and put his hand back on the wheel.

Mind you, I have no data to prove that coffee cups pose a greater evil than cellphones. But the core issue remains: Cellphone use is just one of many factors that contribute to driver distraction. In fact, research suggests that cellphones account for only 5% of distraction-related accidents that end in injury.

So, even if every cellphone on the planet disappeared tomorrow, we would still have a massive problem on our hands. To that end, my colleagues Scott Pennock and Andy Gryc suggest a new approach to designing vehicle cockpit systems in their paper, "Situational Awareness: a Holistic Approach to the Driver Distraction Problem."

The paper explores how system designers can use the concept of situational awareness to develop a vehicle cockpit that helps the driver become more aware of objects and events on the road, and that adapts in-vehicle user interfaces to manage the driver’s cognitive load.

It's worth a read. And who knows, perhaps someone, someday, will develop a cockpit system that detects if you are sipping something and tells you what you need to hear: "Dammit Jack, put that cup down. It's not worth endangering other people's lives for the sake of a f***ing latté."

Tuesday, November 1, 2011

Building a hands-free future

The end of my street is governed by a three-way stop. The other morning I was backing out of my driveway when someone rolled past the stop sign and came within inches of hitting me. I stopped, glared at him, and resumed driving. Two stop signs later, the same guy squeezed past my car (in the same lane), completely oblivious to what he was doing.

Why was he driving like this? Probably because he was deeply engrossed in a conversation on his cell phone.

Where I live, using a handset while driving has been illegal for over a year. You cannot talk, you cannot text, you cannot “Facebook”, you cannot Tweet — even if you're stopped at a red light. This makes perfect sense to me. As a driver, your primary responsibility is to control the vehicle. And yet I see people texting on the freeway, talking on their cell phones, and doing who knows what else on an alarmingly regular basis.

Society has become obsessed with mobile devices, and it will take more than legislation to change its behavior. The answer, I think, is to embrace the behavior in a way that makes it possible to interact socially while maintaining control of the car. We’ve seen great progress in hands-free/phone integration, and BMW ConnectedDrive offers an example of how drivers can access email and other smartphone services more safely.

This is just the tip of the iceberg. Integrating the handset with the infotainment unit is going to change the way you interact with your car. Intelligently designed apps, combined with multimodal human-machine interfaces, will let you Tweet or update Facebook using speech recognition while keeping your eyes on the road.

Without taking your hands off the wheel, you’ll be able to call a friend and decide that you want to go to dinner, do a local search to find out what’s available, check a restaurant review on Yelp, make a reservation, text your friend back with the time and place, and aim your navigation system at the restaurant. And you’ll be able to do it using natural language. None of this “please say a name” stuff.

Seems futuristic? It’s not. People are working on it today. In fact, QNX-based systems, such as Toyota Entune, already offer a taste of this hands-free and highly personalized future.

The QNX-powered BMW ConnectedDrive system

Labels:

BMW,

Driver distraction,

Hands-free systems,

Romain Saha,

Smartphones,

Speech interfaces,

Toyota Entune

Sunday, October 30, 2011

Speech interfaces: UI revolution or intelligent evolution?

Speech interfaces have received a lot of attention recently, especially with the marketing blitz for Siri, the new speech interface for the iPhone. After watching some of the TV commercials, you might conclude that you can simply talk to your phone as if it were your friend, and it will figure out what you want. For example, in one scenario the actor asks the phone, “Do I need a raincoat?”, and the phone responds with weather information.

A colleague commented that if he wanted weather information he would just ask for it. As in “What is the weather going to be like in Seattle?” or “Is it going to rain in Seattle?”.

Without more conversational context, if a friend were to ask me, “Do I need a raincoat?”, I would probably respond, “I don’t know, do you?” — jokingly, of course.

Evo or revo?

Are we ready to converse with our phones and cars?

Possibly. But I think it will be more of a UI evolution than a UI revolution. In other words, speech interfaces will play a bigger role in UI designs, but that doesn't mean you're about to start talking to your phone — or any other device — as if it’s your best friend.

Currently, speech interfaces are underutilized. The reasons for this aren't yet clear, though they seem to encompass both technical and user issues. Traditionally, speech recognition accuracy rates have been less than perfect. Poor user interface design (for instance, reprompting strategies) has contributed to the overall problem and to increased user frustration.

Also, people simply aren't used to speech interfaces. For example, many phones support voice-dialing, yet most people don't use this feature. And user interface designers seem reluctant to leverage speech interfaces, possibly because of the additional cost and complexity, lack of awareness, or some other reason.

As a further complication, relying heavily on speech as an interface can lead to a suboptimal user experience. Speech interfaces pose some real challenges, including recognition accuracy rates, natural language understanding, error recovery dialogs, UI design, and testing. They aren't the flawless wonders that some marketers would lead you to believe.

Still, I believe there is a happy medium for leveraging speech interfaces as part of a multimodal interface, one that uses speech where it makes sense. Some tasks are better suited for a speech interface, while others are not. For example, speech is an ideal way to provide input to an application when you can capitalize on information stored in the user’s head. But it’s much less successful when dealing with large lists of unfamiliar items.

Talkin' to your ride

Other factors, besides Apple, are driving the growing role of speech interfaces — particularly in automotive. Speech interfaces can, for example, help address the issue of driver distraction. They allow drivers to keep their “eyes on the road and hands on the wheel,” to quote an oft-used phrase.

So, will we see a paradigm shift towards speech interfaces? It's unlikely. I'm hoping, though, that we'll see a UI evolution that makes better use of them.

Think of it more as a paradigm nudge than a paradigm shift.

Recommended reading

Situational Awareness: a Holistic Approach to the Driver Distraction Problem

Wideband Speech Communications for Automotive: the Good, the Bad, and the Ugly

Labels:

Driver distraction,

HMIs,

Scott Pennock,

Smartphones,

Speech interfaces

Thursday, October 27, 2011

Enabling the next generation of cool

Capturing QNX's presence in automotive can’t be done, IMHO, without a nod to our experience in other markets. Take, for example, the extreme reliability required for the International Space Station and the Space Shuttle. This is the selfsame reliability that automakers rely on when building digital instrument clusters that cannot fail. The same goes for the impressive graphics on the BlackBerry PlayBook. As a result, Tier 1s and OEMs can now bring consumer-level functionality into the vehicle.

Multicore is another example. The automotive market is just starting to take note, while QNX has been enabling multiprocessing for more than 25 years.

So I figure that keeping our hand in other industries means we actually have more to offer than other vendors who specialize.

I tried to capture this in a short video. It had to be done overnight, so it’s a bit of a throwaway, but (of course) I'd like to think it works. :-)

Tuesday, October 25, 2011

QNX and Freescale talk future of in-car infotainment

Paul Leroux

If you've read any of my blog posts on the QNX concept car, you've seen an example of how mixing QNX and Freescale technologies can yield some very cool results.

So it's no surprise that when Jennifer Hesse of Embedded Computing Design wanted to publish an article on the challenges of in-car infotainment, she approached both companies. The resulting interview, which features Andy Gryc of QNX and Paul Sykes of Freescale, runs the gamut — from mobile-device integration and multicore processors to graphical user interfaces and upgradeable architectures. You can read it here.

Labels:

Infotainment,

Mobile connectivity,

Paul Leroux

Monday, October 24, 2011

When will I get apps in my car?

I read the other day that Samsung’s TV application store has surpassed 10 million app downloads. That got me thinking: When will the 10 millionth app download occur in the auto industry as a whole? (Let’s not even consider 10 million apps for a single automaker.)

There’s been much talk about the car as the fourth screen in a person’s connected life, behind the TV, computer, and smartphone. The car rates so high because of the large amount of time people spend in it. While driving to work, you may want to listen to your personal flavor of news, listen to critical email through a safe, text-to-speech email reader, or get up to speed on your daily schedule. When returning home, you likely want to unwind by tapping into your favorite online music service. Given the current norm of using apps to access online content (even if the apps are a thin disguise for a web browser), this raises the question: when can I get apps in my car?

A few automotive examples exist today, such as GM MyLink, Ford Sync, and Toyota Entune. But app deployment to vehicles is still in its infancy. What conditions, then, must exist for apps to flourish in cars? A few stand out:

Cars need to be upgradeable to accept new applications — This is a no-brainer. However, because a car can stay on the road for 10+ years, a thin-client application strategy seems appropriate.

Established rules and best practices to reduce driver distraction — These must be made available to, and understood by, the development community. Remember that people drive cars at high speeds and cannot fiddle with unintuitive, hard-to-manipulate controls. Apps that consumers can use while driving will become the most popular. Apps that can be used only when the car is stopped will hold little appeal.

A large, unfragmented platform to attract a development community — Developers are more willing to create apps for a platform when they don't have to create multiple variants. That's why Apple maintains a consistent development environment and Google/Android tries to prevent fragmentation. Problem is, fragmentation could occur almost overnight in the automotive industry — imagine 10 different automakers with 10 different brands, each wanting a branded experience. To combat this, a common set of technologies for connected automotive application development (think web technologies) is essential. Current efforts to bring applications into cars all rely on proprietary SDKs, ensuring fragmentation.

Other barriers undoubtedly exist, but these are the most obvious.

By the way, don’t ask me for my prediction of when the 10 millionth app will ship in auto. There’s lots of work to be done first.

Entune takes a hands-free approach to accessing apps.

Labels:

Apps,

Connected Car,

Driver distraction,

GM MyLink,

Kerry Johnson,

Toyota Entune

Wednesday, October 19, 2011

Marking over 5 years of putting HTML in production cars

Think back to when you realized the Internet was reaching beyond the desktop. Or better yet, when you realized it would touch every facet of your life. If you haven’t had that second revelation yet, perhaps you should read my post about the Twittering toilet.

For me, the realization occurred 11 years ago, when I signed up with QNX Software Systems. QNX was already connecting devices to the web, using technology that was light years ahead of anything else on the market. For instance, in the late 1990s, QNX engineers created the “QNX 1.44M Floppy,” a self-booting promotional diskette that showcased how the QNX OS could deliver a complete web experience in a tiny footprint. It was an enormous hit, with more than 1 million downloads.

Embedding the web, dot com style: The QNX-powered Audrey

At the time, Don Fotsch, one of the creators of Audrey, 3Com's QNX-powered Internet appliance, coined the term “Internet Snacking” to describe the device’s browsing environment. The dot-com crash in 2001 cut Audrey’s life short, but QNX maintained its focus on enabling a rich Internet experience in embedded devices, particularly those within the car.

The point of these stories is simple: Embedding the web is part of the QNX DNA. At one point, we even had multiple browser engines in production vehicles, including the ACCESS NetFront engine, the QNX Voyager engine, and the Openwave WAP browser. In fact, we have had cars on the road with web technologies since model year 2006.

With that pedigree in enabling HTML in automotive, we continue to push the envelope. We already enable unlimited web access with full browsers in BMW and other vehicles, but HTML in automotive is changing from a pure browsing experience to a full user experience encompassing applications and HMIs. With HTML5, this experience extends even to speech recognition, AV entertainment, rich animations, and full application environments. Angry Birds, anyone?

People often talk about “App Snacking” now, but in the next phase of HTML5 in the car, it will be “What’s for dinner?”!

Labels:

Andrew Poliak,

HMIs,

HTML5,

Infotainment,

QNX OS

Tuesday, October 18, 2011

BBDevCon — Apps on BlackBerry couldn't be better

Unfortunately, I joined the BBDevCon live broadcast a little too late to capture some of the amazing TAT Cascades video. RIM announced that TAT will be fully supported as a new HMI framework on BBX (yes, the new name of the QNX OS for PlayBook and phones has now been officially announced). The video was mesmerizing: a picture album with slightly folded pictures falling down in an array, shaded and lit, with tags flying in from the side. It looked absolutely amazing, yet it was created with simple code that configured the TAT framework "list" class with some standard properties. Another very cool TAT demo showed an email filter with an active touch mesh, letting you filter your email in a very visual way. Super cool looking.

HTML5 support is huge, too — RIM has had WebWorks and Torch for a while, but their importance continues to grow. HTML5 apps provide the way to unify older BB devices and any of the new BBX-based PlayBooks and phones. That's a beautiful tie-in to automotive, where we're building our next generation QNX CAR software using HTML5. The same apps running on desktops, phones, tablets, and cars? And on every mobile device, not just one flavor like iOS or Android? Sounds like the winning technology to me.

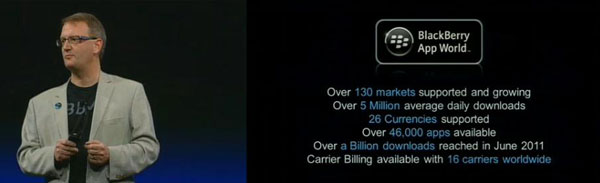

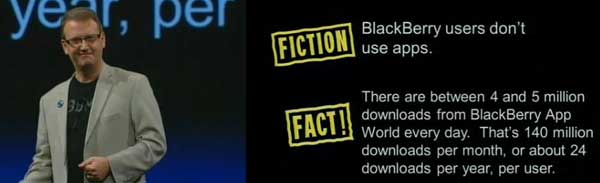

Finally, they talked about the success of App World. There were some really nice facts to constrast with the negative press RIM has received on "apps". First some interesting comparisons: 1% of Apple developers made more than $1000, but 13% of BlackBerry developers made more than $100,000. Whoa. And that App World generates the 2nd most amount of money — more than Android. Also very interesting!

I can't do better than the presenters, so I'll finish up with some pics for the rest of the stats...

HTML5 support is huge, too — RIM has had WebWorks and Torch for a while, but their importance continues to grow. HTML5 apps provide the way to unify older BB devices and any of the new BBX-based PlayBooks and phones. That's a beautiful tie-in to automotive, where we're building our next generation QNX CAR software using HTML5. The same apps running on desktops, phones, tablets, and cars? And on every mobile device, not just one flavor like iOS or Android? Sounds like the winning technology to me.

Finally, they talked about the success of App World. There were some really nice facts to constrast with the negative press RIM has received on "apps". First some interesting comparisons: 1% of Apple developers made more than $1000, but 13% of BlackBerry developers made more than $100,000. Whoa. And that App World generates the 2nd most amount of money — more than Android. Also very interesting!

I can't do better than the presenters, so I'll finish up with some pics for the rest of the stats...

Labels:

Andy Gryc,

App World,

BlackBerry,

HMIs,

HTML5,

QNX CAR,

Smartphones,

Tablets

New release of QNX acoustic processing suite means less noise, less tuning for hands-free systems

Paul Leroux

The QNX Aviage Acoustic Processing Suite, used by 18 automakers on over 100 vehicle platforms, provides modules for both the receive side and the send side of hands-free calls. The modules include acoustic echo cancellation, noise reduction, wind blocking, dynamic parametric equalization, bandwidth extension, high frequency encoding, and many others. Together, they enable high-quality voice communication, even in a noisy automotive interior.

Highlights of version 2.0 include:

Enhanced noise reduction — Minimizes audio distortions and significantly improves call clarity. Can also reconstruct speech masked by low-frequency road and engine noise.

Automatic delay calculation and compensation — Eliminates almost all product tuning, enabling automakers to save significant deployment time and expense.

Off-axis noise rejection — Rejects sound not directly in front of a microphone or speaker, allowing dual-microphone solutions to home in on the person speaking for greater intelligibility.
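The announcement doesn't say how the suite calculates delay. One common, generic way to estimate the delay between the far-end (reference) signal and what the microphone picks up is cross-correlation: slide one signal against the other and pick the lag where they match best. The minimal Python sketch below assumes both signals are available as lists of samples at the same rate; `estimate_delay`, `compensate`, and `max_lag` are hypothetical names, and this is not the QNX implementation.

```python
from typing import List, Sequence


def estimate_delay(reference: Sequence[float], mic: Sequence[float], max_lag: int) -> int:
    """Return the lag, in samples, at which `mic` best lines up with `reference`.

    Brute-force cross-correlation: for each candidate lag, sum
    reference[n] * mic[n + lag] over the overlapping samples and keep
    the lag that produces the largest sum.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        score = sum(
            reference[n] * mic[n + lag]
            for n in range(len(reference))
            if n + lag < len(mic)
        )
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def compensate(reference: Sequence[float], lag: int) -> List[float]:
    """Delay the reference by `lag` samples (prepend zeros) so it aligns with the mic."""
    return [0.0] * lag + list(reference)
```

Brute force keeps the sketch short, but it costs on the order of N × `max_lag` multiplications, which is why FFT-based correlation is a common alternative for longer signals.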

To read the press release, click here. To learn more about the acoustic processing suite, visit the QNX website.

The QNX Aviage Acoustic Processing Suite can run on the general-purpose processor, saving the cost of a DSP.

Labels:

Acoustic processing,

Hands-free systems,

Paul Leroux
