Technology in cars has been advancing at an impressive rate. From rich infotainment systems to intelligent digital instrument clusters, today’s automobile has evolved to become a cool reality that many of us only envisioned as a possibility a few years ago. But while the technology has changed, the driver has stayed the same. Drivers still need to get from point A to point B as efficiently and safely as possible, while perhaps listening to some favorite road trip tunes on the journey.

What has changed for drivers is the sheer volume of information that is available while behind the wheel. Today’s vehicle can tell you more than the fact that you are desperately in need of finding the nearest gas station. It’s smart enough to let you know when you are getting close to hitting the neighbor’s garbage can… again. It can alert you to traffic pattern changes, road hazards, inclement weather, your lead-foot tendencies, and the fact that your spouse is texting you to remind you to pick up the dry cleaning. It can also effortlessly re-route you back to the dry cleaners after you realize you’ve forgotten, providing you with helpful turn-by-turn navigation in your instrument cluster.

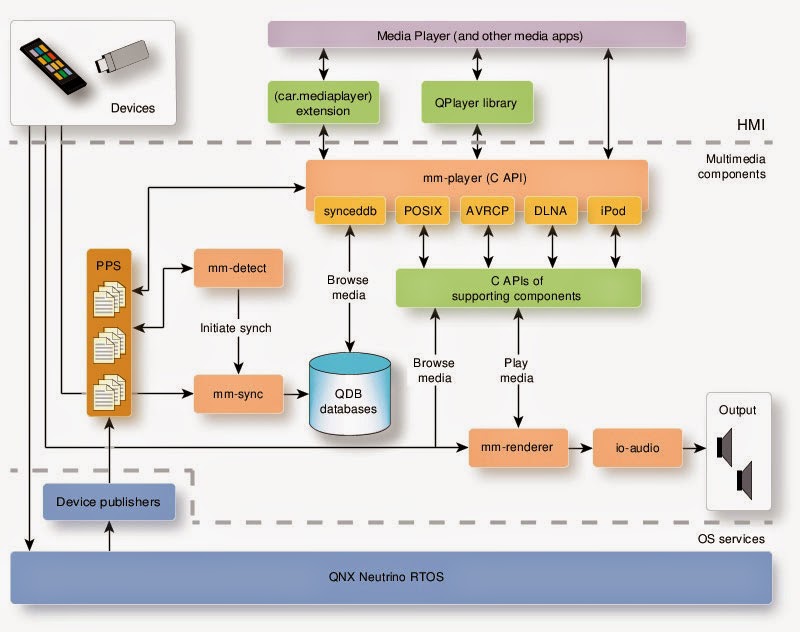

That’s a lot of information. And it’s only a small slice of what’s available to today’s driver. The simplicity, reliability, and safety capabilities of platforms by QNX Software Systems make it possible to have a wide range of technologies and features in a single vehicle, offering up an abundance of data for driver consumption.

So, how do we make this data useful for drivers? What do we need to consider when designing the UI for digital instrument clusters?

How much information does the driver REALLY need?

Information should be helpful, not intrusive or distracting from the task at hand: driving. The point of having more data available to drivers isn’t to show it all at the same time. That’s visually noisy and complex. Complex isn’t better; context is better. Turn-by-turn information can be displayed in the instrument cluster, based on communication from the navigation system. Video of the car’s surroundings can be displayed when parking assist services are engaged. Advanced Driver Assistance Systems (ADAS) can present alerts in the cluster about immediate hazards and objects.
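To make "context is better" concrete, here is a minimal sketch of how a cluster might pick a single contextual view instead of showing everything at once. It is not Storyboard Suite or QNX code; the `VehicleContext` fields, the view names, and the priority order (hazard alerts first, then parking video, then navigation) are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VehicleContext:
    """Hypothetical snapshot of the signals the cluster UI reacts to."""
    hazard_alert: Optional[str] = None   # assumed to come from an ADAS module
    park_assist_engaged: bool = False    # assumed flag from parking assist
    next_maneuver: Optional[str] = None  # assumed to come from navigation


def select_cluster_view(ctx: VehicleContext) -> str:
    """Return the one contextual view to show, most urgent first.

    The ordering below is an assumed priority, not a documented rule.
    """
    if ctx.hazard_alert is not None:
        return f"ALERT: {ctx.hazard_alert}"
    if ctx.park_assist_engaged:
        return "SURROUND_VIDEO"
    if ctx.next_maneuver is not None:
        return f"NAV: {ctx.next_maneuver}"
    # Nothing contextual is active, so fall back to the basic gauges.
    return "DEFAULT_GAUGES"
```

In a sketch like this, adding a new data source means deciding where it sits in the priority order, which keeps the conversation focused on what the driver needs at that moment rather than on how much can fit on the screen.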

Using tools that support rapid prototyping of design scenarios empowers teams to deliver the best user experience possible, serving up only the most relevant information. Using Storyboard Suite from Crank Software, teams can quickly cycle through design prototypes and perform testing on real hardware, focusing on the needs of the driver.

How do we best visualize the data?

It’s critical that drivers see and interpret displayed information as easily and quickly as possible. Clear visual representation of data is required, so it’s important to keep design considerations at the forefront in the development process. This is where the graphic designer comes in.

Crank Software’s Storyboard Suite allows the graphic designer to be integrated into the development process from concept to final HMI delivery, working in parallel with the engineers to ensure that fine details and subtle design nuances aren’t lost. With Storyboard Suite, designers don’t simply hand a mockup to a developer to recreate in code and then walk away. As the graphics change and evolve to satisfy usability requirements, the designer stays engaged throughout the entire process, helping to deliver a polished HMI.

Automotive cluster designed and developed with Crank Software Storyboard Suite, running on QNX Neutrino OS

Can we respond quickly to design change?

Remaining focused on the usability of the end design is critical to ensuring the safest driving experience. Delivering a high-performance, user-centric HMI requires testing, design refinements, retesting, and even further changes. This isn’t a linear process. While an iterative process is important, it’s often cost-prohibitive because it can introduce lengthy redesign cycles. Storyboard Suite gives teams the functionality to prototype and iterate through designs easily, using features such as Photoshop Re-import to quickly evaluate design changes on hardware and shorten development cycles. In addition, support for collaboration enables teams to share design and development work, thereby reducing the load on individuals and further optimizing time and resources.

A faster development process coupled with a user-focused end design is the key to delivering a highly usable and safe digital instrument cluster to market on schedule and within budget.

A digital instrument cluster developed with Storyboard Suite will be on display at TU-Automotive Detroit in the QNX Software Systems booth, #C92, and the Crank Software booth, #C113. Check out a previous Crank Software and QNX Software Systems collaboration with a Storyboard Suite UI in a QNX technology concept car.

Jason Clarke has over 15 years of experience in the embedded industry, in roles that span development, sales, and marketing. Jason heads up Crank Software’s marketing and sales initiatives.

Visit Crank Software here.